[Paper Review]

ABSTRACT

- Deep convolutional neural networks (CNNs) have driven progress in a wide range of fields over the past few years.

- However, their large number of parameters and floating-point operations still makes them challenging to deploy.

- As a result, interest in pruning convolutional neural networks has been growing.

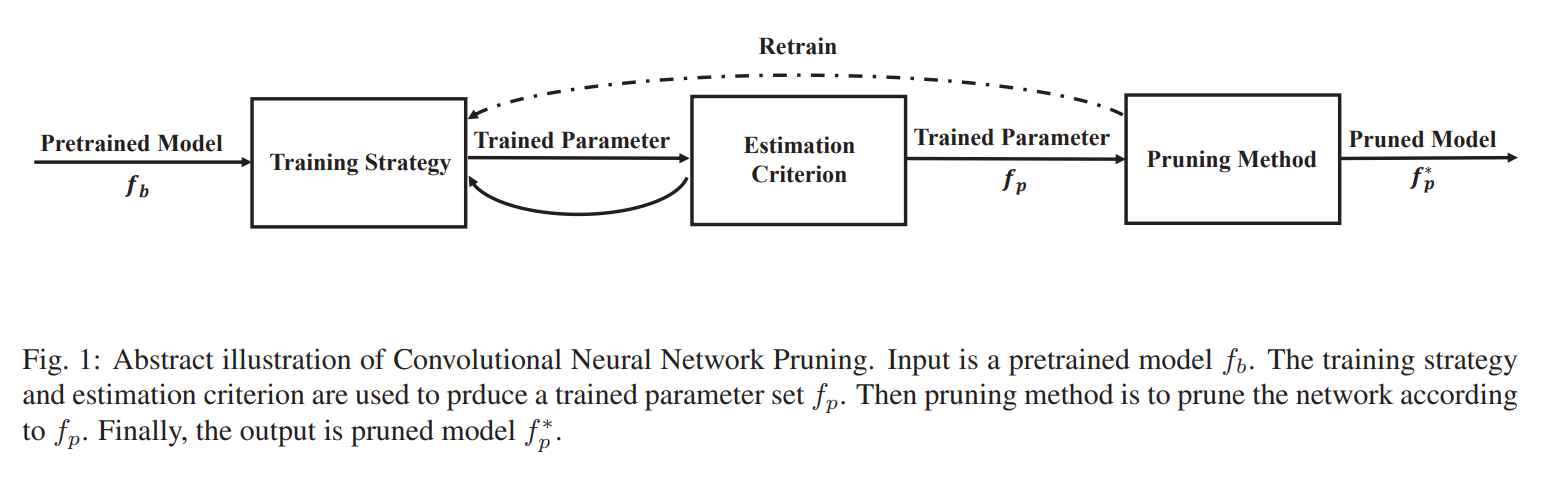

- CNN pruning approaches can be classified along three dimensions: pruning method, training strategy, and estimation criterion.

Key Words : Convolutional neural networks, machine intelligence, pruning method, training strategy, estimation criterion

Introduction

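- To improve performance, ever deeper and wider networks are being designed, which increases the demand for computing power.

- Gains in accuracy come at a high resource cost: modern networks demand substantial resources.

- Pruning addresses this problem by reducing the computation of a CNN, which makes it possible to deploy CNNs on mobile and embedded devices.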

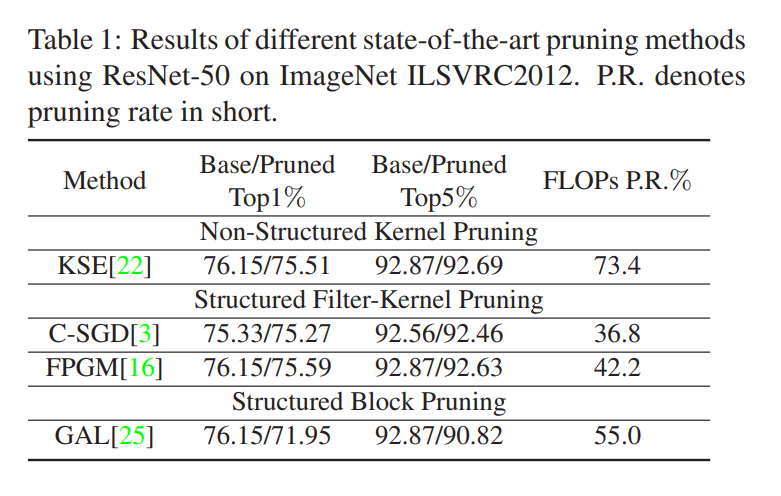

- The survey classifies CNN pruning methods as follows.

- Pruning Method : determines in advance which Training Strategy and Estimation Criterion are used. Pruning methods are divided into non-structured pruning and structured pruning.

- Training Strategy : used to turn parameters into ones that can be removed. It is divided into hard, soft, and redundant approaches.

- Estimation Criterion : decides which parameters are unimportant; such criteria can be designed with a variety of algorithms.

Pruning Method

- The survey examines pruning methods and divides them into two categories.

Non-Structured Pruning

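- Early research on CNN pruning focused on the convolution weights, since models at the time did not have very many parameters.

- Pruning sets unnecessary connections, which account for a large share of the computation at run time, to zero. To keep the architecture consistent, these weights are zeroed rather than physically removed.

- Weight pruning requires the coordinates of every pruned weight, which is hard to accommodate in the models in use today.

- The main problem with weight zeroing is that important weights can be pruned by mistake. To address this, a connection splicing method was proposed that can restore connections pruned in error.

- For structural consistency, a pruned kernel is represented in the CNN by a zero matrix, similar to non-structured pruning.

- Kernel pruning greatly accelerates the non-structured pruning process and can strike a balance between accuracy and pruning speed.

- Kernel pruning mainly means zeroing out redundant two-dimensional convolution kernels.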
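A minimal NumPy sketch of the two zeroing schemes above, assuming a weight tensor in (out_channels, in_channels, kh, kw) layout and magnitude (L1) as the score; `weight_prune_mask` and `kernel_prune_mask` are hypothetical helper names. Both return a 0/1 mask with the same shape as the weights, so the architecture itself is untouched.

```python
import numpy as np


def weight_prune_mask(w: np.ndarray, sparsity: float) -> np.ndarray:
    """Non-structured pruning: zero the individual weights with the smallest |w|."""
    k = int(sparsity * w.size)                       # number of weights to zero
    mask = np.ones(w.shape, dtype=float)
    if k > 0:
        order = np.argsort(np.abs(w), axis=None)     # flat indices, ascending magnitude
        mask.flat[order[:k]] = 0.0
    return mask


def kernel_prune_mask(w: np.ndarray, sparsity: float) -> np.ndarray:
    """Kernel pruning: zero whole 2-D kernels w[o, i] with the smallest L1 norm."""
    out_c, in_c = w.shape[:2]
    scores = np.abs(w).sum(axis=(2, 3))              # one L1 score per (o, i) kernel
    k = int(sparsity * out_c * in_c)                 # number of kernels to zero
    mask2d = np.ones((out_c, in_c), dtype=float)
    if k > 0:
        order = np.argsort(scores, axis=None)
        mask2d.flat[order[:k]] = 0.0
    # Broadcast the per-kernel decision over the kh x kw spatial positions.
    return np.broadcast_to(mask2d[:, :, None, None], w.shape).copy()


rng = np.random.default_rng(0)
w = rng.normal(size=(4, 3, 3, 3))
w_sparse = w * weight_prune_mask(w, 0.5)    # 54 of the 108 weights become zero
w_kernel = w * kernel_prune_mask(w, 0.25)   # 3 of the 12 3x3 kernels become zero
```

Reapplying the mask after each weight update keeps the pruned entries at zero during fine-tuning.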

Structured Pruning

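- Structured pruning compresses and accelerates a CNN by directly removing structured parts of it, and it is well supported by various deep learning libraries.

- Put simply, it selects relatively unnecessary channels in a convolutional network and removes the corresponding structure as a whole.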

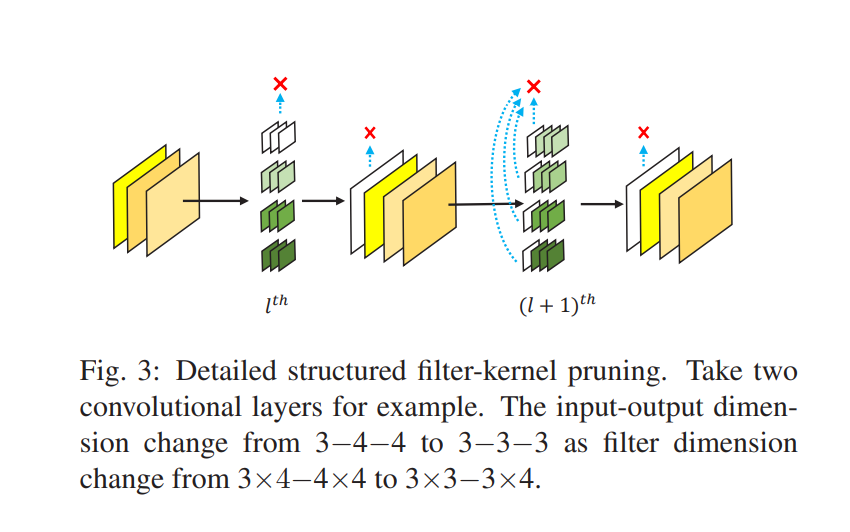

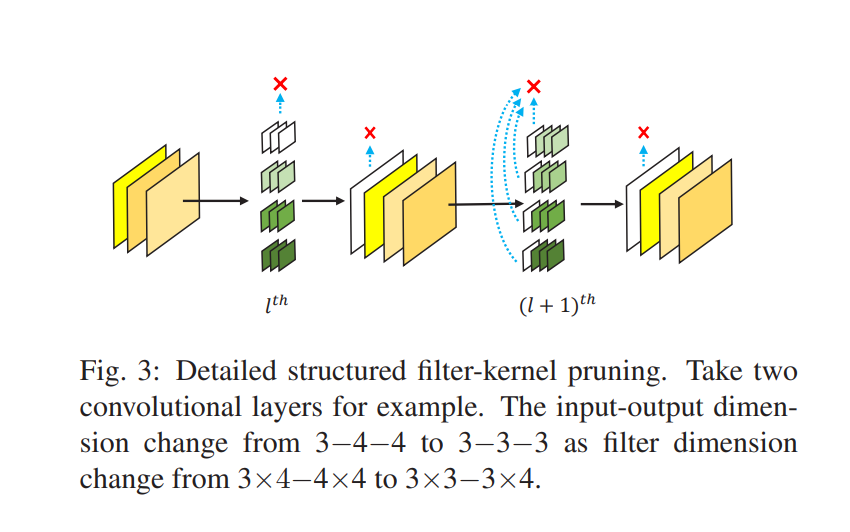

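- Pruning a single filter shrinks the dimension of the output feature map. The kernels in the next layer that consume this feature map must then also be removed to keep the CNN architecture consistent. Structured filter pruning is therefore effectively structured filter-kernel pruning.

- Structured filter-kernel pruning can be applied during training as well as after it.

- Filter-kernel pruning is widely used because it yields a new, smaller architecture and can be applied to any CNN model. It also removes redundancy efficiently, which brings large benefits.

- Block pruning is closer to a remodeling process. It aims to prune entire blocks, but to avoid passing zero input to the following layers, only blocks that keep a remaining connection (such as a shortcut) can be removed.

- It can effectively remove depth redundancy in certain special architectures.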
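To make the filter-kernel coupling concrete, here is a sketch for two consecutive conv layers, assuming NumPy weights in (C_out, C_in, k, k) layout and the L1 norm as a stand-in scoring rule (any estimation criterion could be plugged in). `prune_filters` is a hypothetical helper: removing filter j of layer l removes output channel j, so input channel j of every filter in layer l+1 has to go too.

```python
import numpy as np


def prune_filters(w_l, b_l, w_next, keep_ratio):
    """Remove the weakest filters of layer l and the matching kernels of layer l+1.

    w_l:    (C_out, C_in, k, k)    weights of layer l
    b_l:    (C_out,)               bias of layer l
    w_next: (C_next, C_out, k, k)  weights of layer l+1
    """
    c_out = w_l.shape[0]
    n_keep = max(1, int(round(keep_ratio * c_out)))
    scores = np.abs(w_l).reshape(c_out, -1).sum(axis=1)   # L1 norm per filter
    keep = np.sort(np.argsort(scores)[-n_keep:])           # kept filters, original order
    # Dropping output channel j of layer l means dropping input channel j of layer l+1.
    return w_l[keep], b_l[keep], w_next[:, keep], keep


rng = np.random.default_rng(0)
w1 = rng.normal(size=(8, 3, 3, 3))
b1 = rng.normal(size=8)
w2 = rng.normal(size=(16, 8, 3, 3))
w1_p, b1_p, w2_p, kept = prune_filters(w1, b1, w2, keep_ratio=0.5)
print(w1_p.shape, w2_p.shape)   # (4, 3, 3, 3) (16, 4, 3, 3)
```

Unlike the masks used in non-structured pruning, the result is a genuinely smaller pair of dense tensors.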

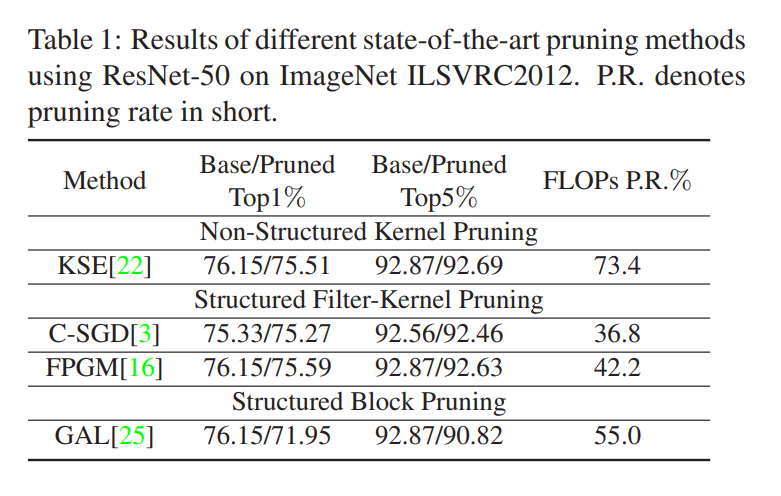

- Within structured pruning, filter-kernel pruning performs better than block pruning, whereas block pruning has the advantage in speed.

- Block pruning can also be combined with filter-kernel pruning -> this achieves an even higher pruning speed.
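The shortcut requirement for block pruning can be illustrated with a toy residual network. This is a sketch under stated assumptions: each block is y = x + relu(x @ W), a dense stand-in for a conv residual block, and blocks are scored by the relative size of their residual output on calibration data, a heuristic chosen only for illustration. `ResidualBlock` and `prune_blocks` are hypothetical names. Because the identity path remains, deleting a block passes its input through unchanged instead of producing zeros.

```python
import numpy as np


def relu(z):
    return np.maximum(z, 0.0)


class ResidualBlock:
    """Toy residual block y = x + relu(x @ w)."""

    def __init__(self, w):
        self.w = w

    def residual(self, x):
        return relu(x @ self.w)

    def __call__(self, x):
        return x + self.residual(x)   # identity shortcut + residual branch


def forward(blocks, x):
    for blk in blocks:
        x = blk(x)
    return x


def prune_blocks(blocks, x_calib, n_remove):
    """Drop the n_remove blocks whose residual branch contributes least.

    Score = ||residual|| / ||input|| on calibration data (illustrative heuristic).
    A removed block behaves like its shortcut, so later blocks still get non-zero input.
    """
    scores, h = [], x_calib
    for blk in blocks:
        r = blk.residual(h)
        scores.append(np.linalg.norm(r) / (np.linalg.norm(h) + 1e-12))
        h = h + r
    drop = set(np.argsort(scores)[:n_remove].tolist())
    return [blk for i, blk in enumerate(blocks) if i not in drop]


rng = np.random.default_rng(0)
x = rng.normal(size=(32, 4))
blocks = [ResidualBlock(rng.normal(size=(4, 4))),
          ResidualBlock(np.zeros((4, 4))),       # contributes nothing
          ResidualBlock(rng.normal(size=(4, 4)))]
pruned = prune_blocks(blocks, x, n_remove=1)    # the all-zero block is dropped
```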

Training Strategy

Hard Pruning Strategy

- Used in most prior work.

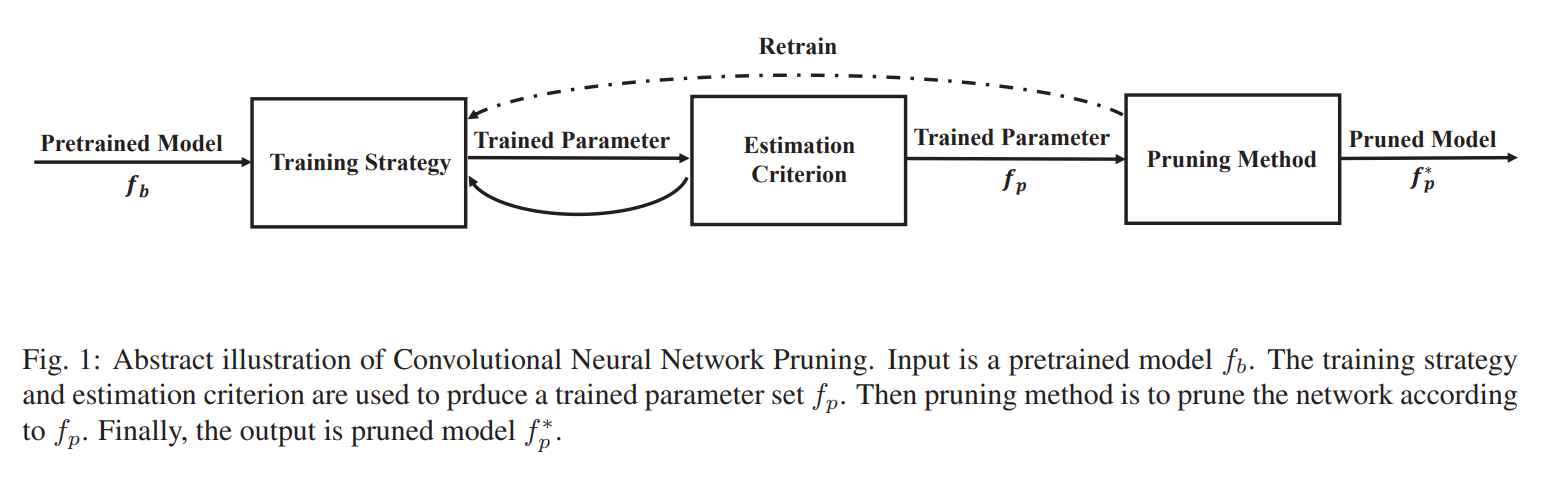

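- As in the 'Retraining' step of Fig 1, pruning and training are carried out sequentially within the training process.

- As shown in Fig 3, when the first output feature map (produced by the first filter of the l-th layer) is emptied, the first kernel of every filter in the (l+1)-th layer is removed as well. -> The network is rebuilt from the remaining layers and fine-tuned to recover accuracy. -> Training and pruning proceed one layer at a time, and the process ends once every layer has been handled.

- Because the pruned structures are physically removed, the hard pruning strategy can only be used with structured pruning.

- Among hard pruning methods, the best performance is obtained by using the reconstruction error with respect to an optimal target.

- Hard pruning is time-consuming because of its repeated fine-tuning process.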
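The layer-by-layer loop described above can be sketched as follows. Assumptions: layers are dense weight matrices of shape (out_i, in_i) standing in for 1x1 convolutions, the L1 norm is the criterion, and `finetune` is a caller-supplied, hypothetical function that retrains the shrunken network. Pruned units are sliced out of the arrays, so they cannot come back, which is what makes the strategy hard.

```python
import numpy as np
from typing import Callable, List


def hard_prune(weights: List[np.ndarray], prune_ratio: float,
               finetune: Callable[[List[np.ndarray]], List[np.ndarray]]) -> List[np.ndarray]:
    """Prune one layer at a time, physically removing units, then fine-tune.

    weights[i] has shape (out_i, in_i) and weights[i + 1].shape[1] == out_i.
    The last (output) layer keeps all of its units.
    """
    weights = [w.copy() for w in weights]
    for i in range(len(weights) - 1):
        w = weights[i]
        n_keep = max(1, int(round((1 - prune_ratio) * w.shape[0])))
        scores = np.abs(w).sum(axis=1)                   # L1 norm per output unit
        keep = np.sort(np.argsort(scores)[-n_keep:])
        weights[i] = w[keep]                             # remove filters of layer i
        weights[i + 1] = weights[i + 1][:, keep]         # remove matching kernels of layer i+1
        weights = finetune(weights)                      # recover accuracy before moving on
    return weights


def no_finetune(ws):
    # Placeholder: a real implementation would retrain the shrunken network here.
    return ws


rng = np.random.default_rng(0)
net = [rng.normal(size=(8, 4)), rng.normal(size=(6, 8)), rng.normal(size=(3, 6))]
pruned = hard_prune(net, prune_ratio=0.5, finetune=no_finetune)
print([w.shape for w in pruned])   # [(4, 4), (3, 4), (3, 3)]
```

The `finetune` call inside the loop runs once per pruned layer, which is where the time cost of hard pruning comes from.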

Soft Pruning Strategy

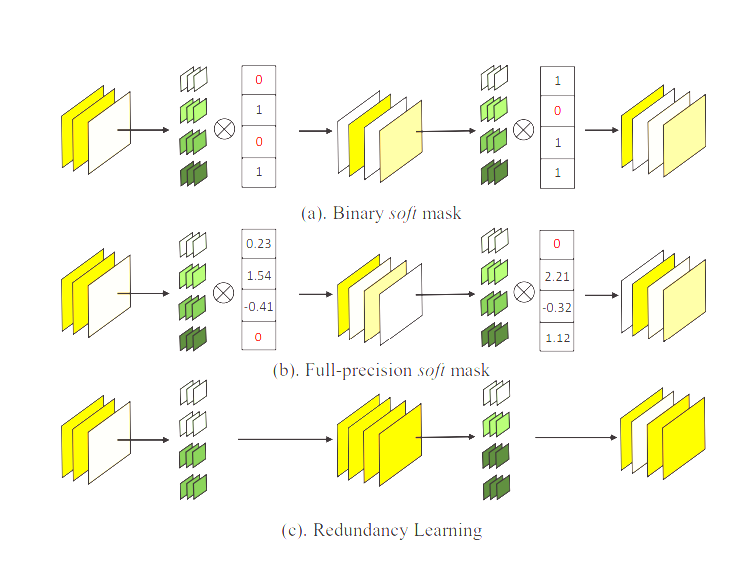

- In non-structured weight pruning, soft pruning replaces unnecessary weights (for example with zeros) instead of deleting them from the model.

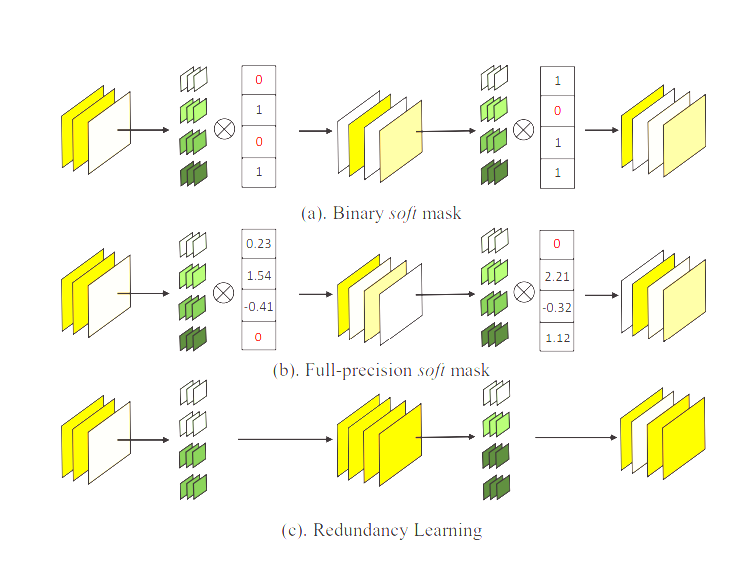

- As shown in Fig 4 (a) and (b), it is applied after the conv layers to train filter-kernel pruning models.
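For contrast with hard pruning, here is a sketch of one common soft formulation, similar in spirit to Soft Filter Pruning: filters are zeroed rather than removed, they keep receiving gradient updates, and the set of zeroed filters is re-selected every epoch. The L2 norm is assumed as the score, and `grad_fn` plus the plain gradient-descent loop are hypothetical placeholders for a real optimizer.

```python
import numpy as np


def soft_prune(w: np.ndarray, prune_ratio: float) -> np.ndarray:
    """Zero, but do not remove, the filters with the smallest L2 norm.

    w has shape (C_out, C_in, k, k); the result keeps that shape, so zeroed
    filters remain in the model and can grow back under later updates.
    """
    c_out = w.shape[0]
    n_prune = int(prune_ratio * c_out)
    norms = np.linalg.norm(w.reshape(c_out, -1), axis=1)
    w = w.copy()
    w[np.argsort(norms)[:n_prune]] = 0.0
    return w


def train_with_soft_pruning(w, grad_fn, lr, prune_ratio, epochs):
    """Hypothetical loop: update every filter, then re-select the zeroed set each epoch."""
    for _ in range(epochs):
        w = w - lr * grad_fn(w)           # zeroed filters receive gradient updates too
        w = soft_prune(w, prune_ratio)    # the mask is recomputed, not frozen
    return w


# Toy objective 0.5 * ||w - target||^2, whose gradient is (w - target).
target = np.array([3.0, 0.0]).reshape(2, 1, 1, 1)
w = soft_prune(np.array([0.1, 1.0]).reshape(2, 1, 1, 1), prune_ratio=0.5)  # filter 0 zeroed
w = train_with_soft_pruning(w, lambda v: v - target, lr=0.5, prune_ratio=0.5, epochs=1)
# Filter 0 has grown back to 1.5, and filter 1 is now the zeroed one.
```

Under hard pruning, filter 0 would have been deleted in the first step and could never recover.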

Redundant Pruning Strategy

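- Most earlier work is based on zeroing out redundant parameters.

- A newer approach based on redundancy learning has been proposed: as shown in Fig 4 (c), it aims to make similar filters identical and then trim the duplicated filters.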
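A sketch of the redundant strategy on a bias-free dense layer followed by another dense layer (stand-ins for conv layers). Assumptions: the cluster assignment is given (for example from k-means on the flattened filters), and filters are pulled toward their cluster mean by a simple update; `pull_together` and `merge_identical` are hypothetical helpers. Once the filters in a cluster are identical, their post-ReLU outputs are identical too, so one copy can be kept and the next layer's matching weights summed without changing the network output.

```python
import numpy as np


def pull_together(w1, clusters, strength, steps):
    """Move each filter (row of w1) toward the mean of its cluster.

    clusters is a list of index lists, e.g. [[0, 2], [1], [3, 4]].
    """
    w1 = w1.copy()
    for _ in range(steps):
        for idx in clusters:
            center = w1[idx].mean(axis=0)
            w1[idx] += strength * (center - w1[idx])
    return w1


def merge_identical(w1, w2, clusters):
    """Keep one filter per cluster and sum the duplicates' columns in the next layer.

    Valid when the filters in each cluster are identical: they then produce the
    same activation h, and w2[:, a] * h + w2[:, b] * h == (w2[:, a] + w2[:, b]) * h.
    """
    keep = [idx[0] for idx in clusters]
    new_w2 = np.stack([w2[:, idx].sum(axis=1) for idx in clusters], axis=1)
    return w1[keep], new_w2


def net(w1, w2, x):
    """Two bias-free dense layers with a ReLU in between."""
    return np.maximum(x @ w1.T, 0.0) @ w2.T


rng = np.random.default_rng(0)
w1 = rng.normal(size=(5, 3))
w2 = rng.normal(size=(2, 5))
x = rng.normal(size=(10, 3))
clusters = [[0, 2], [1], [3, 4]]
w1_same = pull_together(w1, clusters, strength=1.0, steps=1)   # clusters collapse to their means
w1_small, w2_small = merge_identical(w1_same, w2, clusters)     # 5 filters -> 3
```

With `strength=1.0` and a single step the filters jump straight to the cluster mean; smaller values over many steps approximate a gradual pull during training.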

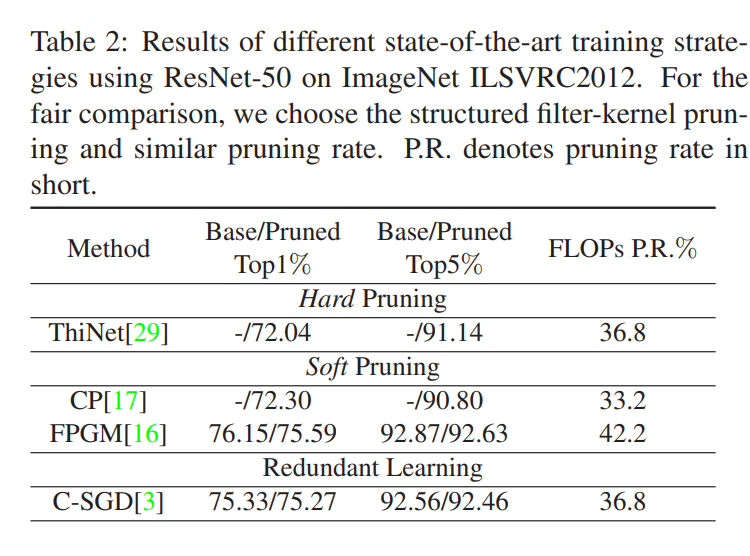

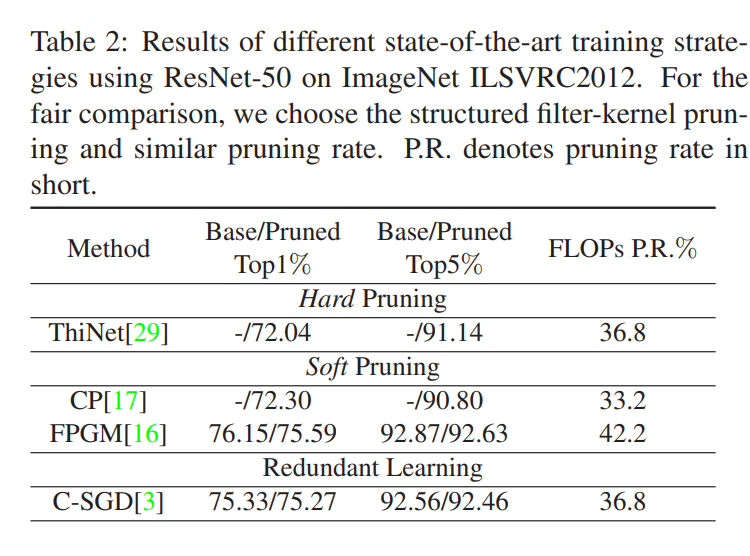

- As Table 2 shows, soft pruning and redundant pruning can reach the best performance, unlike hard pruning.

- Hard pruning also consumes more training time than the other two strategies.

Estimation Criterion

- Estimation criteria are divided into three classes: importance-based, reconstruction-based, and sparsity-based.

Importance-based Criterion

- A common criterion assumes that parameters with a larger norm carry more information, so parameters with smaller norms tend to be pruned.

- Importance-based criteria are widely used because they are fast to compute.
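The norm criterion above amounts to a few lines. A sketch assuming a (C_out, C_in, k, k) NumPy tensor; `p=1` gives the L1 norm and `p=2` the L2 norm, and `norm_ranking` and `filters_to_prune` are hypothetical helper names.

```python
import numpy as np


def norm_ranking(w: np.ndarray, p: int = 1) -> np.ndarray:
    """Return filter indices ordered from smallest to largest Lp norm."""
    flat = w.reshape(w.shape[0], -1)
    scores = np.linalg.norm(flat, ord=p, axis=1)
    return np.argsort(scores, kind="stable")


def filters_to_prune(w: np.ndarray, ratio: float, p: int = 1) -> np.ndarray:
    """Indices of the lowest-norm filters, i.e. the ones an importance criterion removes."""
    return norm_ranking(w, p)[: int(ratio * w.shape[0])]


# Four filters whose entries are all 0.5, -2.0, 0.1 and 1.0 respectively.
w = np.stack([np.full((2, 3, 3), v) for v in (0.5, -2.0, 0.1, 1.0)])
print(filters_to_prune(w, 0.5))   # [2 0]
```

Only one pass over the weights is needed and no data is involved, which is why this family of criteria is cheap.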

Sparsity-based Criterion

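- Focuses on higher-dimensional information.

- It aims to remove filters that share similar information, producing a model with fewer parameters that keeps its feature extraction capability.

- There is also a Filter-Scanner Pruning technique that sparsifies the filters within a layer based on a sparsity-based criterion.

- Methods of this kind achieved the smallest performance drop in both non-structured and structured pruning.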
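The survey does not spell out the sparsity-based formulas here, so the following is only a generic way to operationalize "filters that share similar information": compare flattened filters by cosine similarity and flag those nearly parallel to a filter that is already kept. The threshold and the name `redundant_filters` are assumptions, and this is not Filter-Scanner Pruning.

```python
import numpy as np


def redundant_filters(w: np.ndarray, threshold: float = 0.95) -> list:
    """Flag filters whose cosine similarity to an earlier kept filter exceeds threshold.

    w has shape (C_out, C_in, k, k); filters are scanned in index order.
    """
    flat = w.reshape(w.shape[0], -1)
    unit = flat / (np.linalg.norm(flat, axis=1, keepdims=True) + 1e-12)
    kept, redundant = [], []
    for i in range(len(unit)):
        if kept and np.max(unit[kept] @ unit[i]) > threshold:
            redundant.append(i)       # carries (almost) the same information as a kept filter
        else:
            kept.append(i)
    return redundant


f = np.array([[1.0, 0.0, 0.0],
              [2.0, 0.0, 0.0],     # same direction as filter 0
              [0.0, 1.0, 0.0],
              [0.0, 0.99, 0.1]])   # almost the same direction as filter 2
print(redundant_filters(f.reshape(4, 3, 1, 1)))   # [1, 3]
```

Note that filter 1 is flagged even though its norm is the largest, which is exactly where a similarity view differs from the norm-based criterion.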

Reconstruction-based Criterion

- Unlike the two types above, reconstruction-based criteria focus directly on the output feature map. The main idea is to minimize the reconstruction error, so that the pruned model becomes the best approximation of the pre-trained model.

- For example, a greedy search has been proposed to find the filters that are least important to the output.

- As Table 3 shows, importance-based and sparsity-based criteria can achieve better performance than reconstruction-based criteria.
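A sketch of the greedy reconstruction idea for a single layer, modeled as y = x @ w.T with sampled inputs x of shape (N, C), a 1x1-conv stand-in. At each step it drops the input channel whose removal increases the squared reconstruction error least, then refits the remaining weights to the original output by least squares. This is a simplified illustration of greedy channel selection, not the exact procedure of any specific paper; `greedy_channel_removal` is a hypothetical name.

```python
import numpy as np


def greedy_channel_removal(x, w, n_remove):
    """Greedily drop the input channels that matter least for reconstructing y = x @ w.T.

    x: (N, C) sampled layer inputs; w: (O, C) weights; assumes n_remove < C.
    Returns (kept_channels, refit_w) with refit_w of shape (O, len(kept_channels)).
    """
    y = x @ w.T
    kept = list(range(x.shape[1]))
    for _ in range(n_remove):
        best_c, best_err = None, np.inf
        for c in kept:
            trial = [k for k in kept if k != c]
            err = np.sum((y - x[:, trial] @ w[:, trial].T) ** 2)
            if err < best_err:
                best_c, best_err = c, err
        kept.remove(best_c)
    # Refit the surviving weights to the original output (ordinary least squares).
    sol, *_ = np.linalg.lstsq(x[:, kept], y, rcond=None)
    return kept, sol.T


rng = np.random.default_rng(0)
x = rng.normal(size=(200, 6))
w = rng.normal(size=(4, 6))
w[:, [1, 4]] *= 0.01                     # channels 1 and 4 barely affect the output
kept, w_fit = greedy_channel_removal(x, w, n_remove=2)
```

The search is quadratic in the number of channels per layer, so it is considerably more expensive than simply ranking filters by norm.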
