
[Paper Review]

ABSTRACT

- Why does standard gradient descent with random initialization perform poorly on deep neural networks?

- With random initialization, the logistic sigmoid activation function is ill-suited to deep networks because of its mean value.

- It drives the top hidden layer into saturation.

- The paper introduces a new initialization scheme that brings substantially faster convergence.

Deep Neural Networks

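- Deep learning aims to learn a hierarchy of features.

- Extracted features: features at the higher levels of the hierarchy, formed by composing lower-level features.

- Learning complex functions requires the ability to represent high-level abstractions, and deep architectures are one way to achieve this.

- Most recent deep architectures rely on unsupervised pre-training, which works much better than random initialization followed by gradient-based optimization.

- Prior work suggests that unsupervised pre-training acts as a kind of regularizer that initializes the parameters in a better region for the optimization procedure.

- The local minimum reached from that region is associated with better generalization.

- Rather than focusing on what unsupervised pre-training or semi-supervised learning brings to deep architectures, the paper analyzes what can go wrong when training classical but deep multilayer neural networks.

- The activations and gradients of each layer are monitored and analyzed, and the choice of activation function and initialization method is then evaluated.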

Experimental Setting and Datasets

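Online Learning on an Infinite Dataset

- Recent results with deep architectures show that, with large training sets or online learning, initializing from unsupervised pre-training yields a substantial improvement that persists as the number of training examples grows.

- Online learning puts the focus on the optimization problem rather than on the regularization effects of small samples.

- Images were sampled from the dataset with constraints on the two objects they contain.

- There are nine classes in total.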

Finite Datasets

- MNIST

- CIFAR-10, with 10,000 images held out as a validation set

- Small-ImageNet

Experimental Setting

- Feedforward neural networks with one to five hidden layers were optimized.

- Each hidden layer has 1,000 hidden units.

- The output layer is a softmax logistic regression.

- The cost function is the negative log-likelihood, $ -\log P(y|x) $.

- Optimization uses stochastic back-propagation with mini-batches of size 10.

- The nonlinear activation function of the hidden layers was varied:

- Sigmoid, tanh, and the newly proposed softsign, $ x / (1 + |x|) $

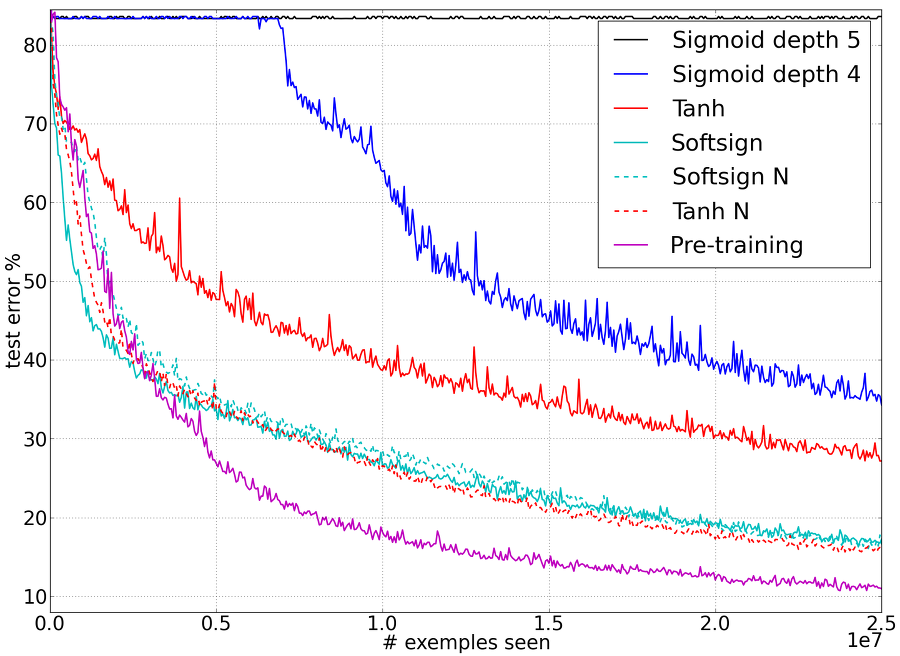

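- The best hyperparameters were searched separately for each model. The best number of hidden layers was always five, except for the sigmoid, where it was four.

- Biases were initialized to 0, and the weights of each layer were initialized with a commonly used heuristic.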
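The setup above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the function names are hypothetical, the "commonly used heuristic" is taken to be the $ U[-1/\sqrt{n}, 1/\sqrt{n}] $ rule quoted later in this review, and `n` is assumed to be the number of inputs to the layer.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def softsign(x):
    # Softsign as given in the list above: x / (1 + |x|)
    return x / (1.0 + abs(x))

# The three hidden-layer nonlinearities that were compared.
ACTIVATIONS = {"sigmoid": sigmoid, "tanh": math.tanh, "softsign": softsign}

def standard_init(n_in, n_out, rng):
    # Assumed heuristic: W_ij ~ U[-1/sqrt(n_in), 1/sqrt(n_in)]
    bound = 1.0 / math.sqrt(n_in)
    return [[rng.uniform(-bound, bound) for _ in range(n_in)]
            for _ in range(n_out)]

def softmax_nll(logits, target):
    # Negative log-likelihood -log P(y|x) under a softmax output,
    # computed with the log-sum-exp trick for numerical stability.
    m = max(logits)
    log_norm = m + math.log(sum(math.exp(v - m) for v in logits))
    return log_norm - logits[target]

rng = random.Random(0)
W = standard_init(n_in=1000, n_out=3, rng=rng)  # one small weight matrix
b = [0.0] * 3                                   # biases start at 0

print(softsign(1.0))                       # 0.5
print(softmax_nll([0.0, 0.0, 0.0], 1))     # log(3): uniform prediction over 3 classes
```

Only three output units are created here to keep the example small; the networks described above use 1,000 units per hidden layer.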

Effect of Activation Functions and Saturation During Training

Experiments with the Sigmoid

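- The sigmoid nonlinearity is already known to slow down learning, because its non-zero mean induces large singular values in the Hessian.

- Observations

- Layer 1 denotes the output of the first hidden layer, and there are four hidden layers in total.

- The plot shows the mean and standard deviation of the activations of each layer.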

- All sigmoid activation values in Layer 4 (the last hidden layer) move quickly to 0. In contrast, the mean activation of the other layers stays above 0.5, and this value decreases going from the output layer toward the input layer.

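- With the sigmoid activation function, this kind of saturation lasts longer the deeper the model is. With an intermediate number of hidden layers (four here), however, the network was able to escape the saturation regime. While the top hidden layer moved out of saturation, the first hidden layer began to saturate and therefore to stabilize.

- Interpretation

- This behavior is attributed to the combination of random initialization and the fact that a hidden-unit output of 0 corresponds to a saturated sigmoid.

- Early in training, the softmax output probably relies more on its biases b than on the top hidden activations h derived from the input image. This is because h varies in ways that do not predict y and is probably correlated mostly with other, more dominant variations of x. The error gradient therefore tends to push Wh toward 0, which can be achieved by pushing h toward 0.

- For symmetric activation functions such as tanh or softsign, an output around 0 is fine because gradients can still flow backward. Pushing sigmoid outputs to 0, however, brings them into the saturation regime.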
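The last point can be checked numerically. The sketch below expresses each derivative as a function of the unit's output (the helper names are hypothetical): at an output of 0 the sigmoid passes no gradient at all, while tanh and softsign are in their linear regime with slope 1.

```python
def sigmoid_grad_from_output(s):
    # d/dx sigmoid(x) = s * (1 - s), where s = sigmoid(x)
    return s * (1.0 - s)

def tanh_grad_from_output(t):
    # d/dx tanh(x) = 1 - t^2, where t = tanh(x)
    return 1.0 - t * t

def softsign_grad_from_output(y):
    # d/dx softsign(x) = 1 / (1 + |x|)^2 = (1 - |y|)^2, where y = softsign(x)
    return (1.0 - abs(y)) ** 2

for name, grad in [("sigmoid", sigmoid_grad_from_output),
                   ("tanh", tanh_grad_from_output),
                   ("softsign", softsign_grad_from_output)]:
    print(f"{name:8s} slope at output 0: {grad(0.0)}")
```

The sigmoid reaches its largest slope, 0.25, at an output of 0.5, which is where a freshly initialized unit tends to sit.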

Experiments with the Hyperbolic tangent

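- A network that uses the hyperbolic tangent as its activation function does not suffer from saturation of the top hidden layer, because tanh is symmetric around 0.

- With the standard weight initialization $ U[-1/\sqrt{n}, 1/\sqrt{n}] $, however, saturation occurs sequentially, starting from layer 1. (Fig 3)

- Top image: percentiles (markers) and standard deviation (solid lines) of the distribution of activation values during training, for a network with hyperbolic tangent activations -> the first hidden layer saturates first, then the second.

- Bottom image: percentiles (markers) and standard deviation (solid lines) of the distribution of activation values for a network with softsign activations -> here the layers saturate less, and they do so together.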

Experiments with the Softsign

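- Softsign is similar to the hyperbolic tangent, but it may behave differently with respect to saturation because its tails are polynomial rather than exponential. As Fig 3 shows, saturation does not spread from one layer to the next.

- Training with softsign is fast at first and then slows down, and all layers move toward larger weights.

- The activations settle around the knees, between the linear regime near 0 and the flat regime near -1 and 1. This area has substantial nonlinearity but still lets gradients flow well.

- Top image: hyperbolic tangent; saturation of the lower layers is visible.

- Bottom image: softsign; many activation values lie around (-0.6, -0.8) and (0.6, 0.8) without saturating.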
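The difference between exponential and polynomial tails is easy to see in the derivatives. A minimal sketch (function names are illustrative): for large |x| the tanh slope collapses exponentially, while the softsign slope decays only like 1/x².

```python
import math

def tanh_grad(x):
    # d/dx tanh(x) = 1 - tanh(x)^2, which decays exponentially in |x|
    return 1.0 - math.tanh(x) ** 2

def softsign_grad(x):
    # d/dx [x / (1 + |x|)] = 1 / (1 + |x|)^2, which decays polynomially in |x|
    return 1.0 / (1.0 + abs(x)) ** 2

for x in (0.0, 1.0, 3.0, 10.0):
    print(f"x={x:5.1f}  tanh'={tanh_grad(x):.2e}  softsign'={softsign_grad(x):.2e}")
```

Near the origin tanh is actually the steeper of the two; softsign only keeps the larger gradient out in the tails, which is where saturation matters.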

Studying Gradients and their Propagation

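- The logistic regression (conditional log-likelihood) cost function was found to work much better than the quadratic cost traditionally used to train feedforward networks.

- The variance of the back-propagated gradients was found to decrease as they propagate backward through the network.

- Fig 5: cross entropy (black) and quadratic cost (red) as a function of two weights of a two-layer network: W1 on the first layer and W2 on the second.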

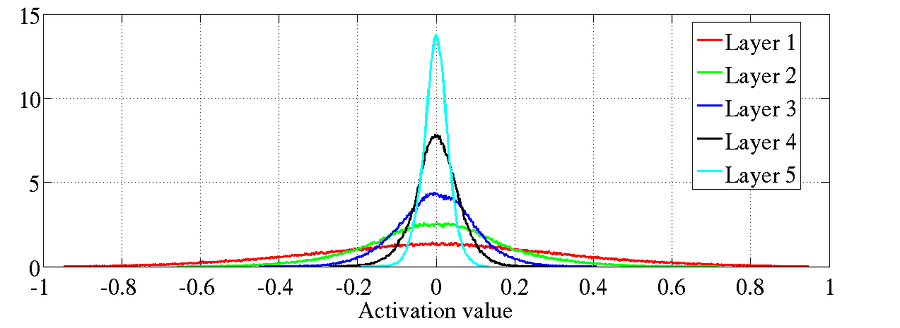

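- The normalization factor matters when initializing deep networks because of the multiplicative effect through the layers.

- An initialization procedure is proposed that keeps the activation variances and the back-propagated gradient variances roughly constant when moving up or down the network. It is called the normalized initialization.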

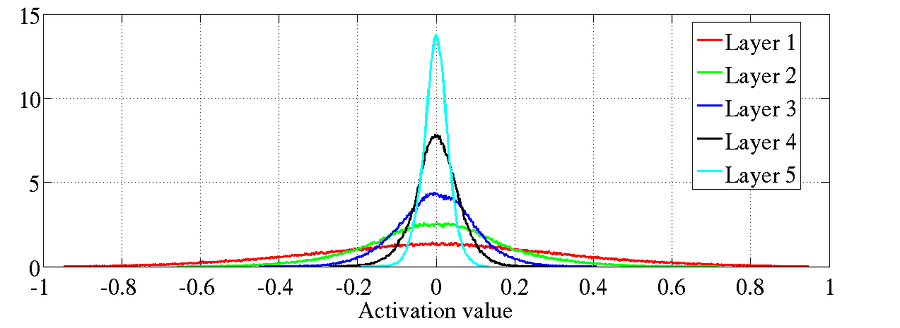

- Top image: normalized histograms of activation values with hyperbolic tangent activation, under the standard initialization.

- Bottom image: the same under the normalized initialization.

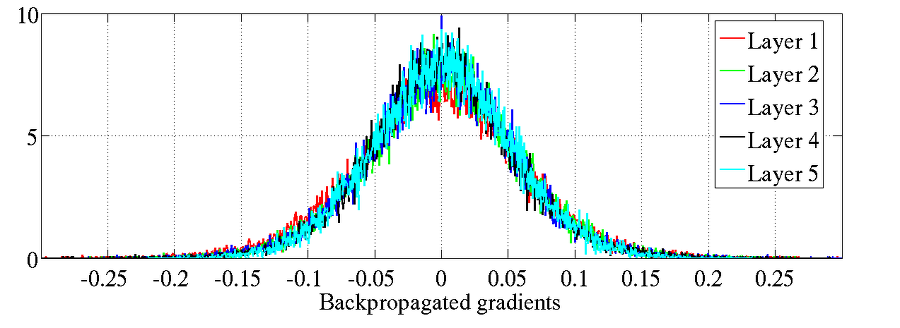

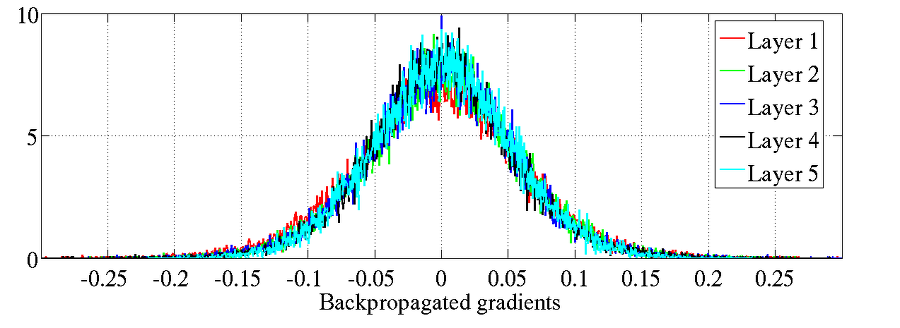
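The review names the normalized initialization without writing out its formula. The sketch below assumes the commonly cited form, $ W \sim U[-\sqrt{6}/\sqrt{n_{in} + n_{out}}, \sqrt{6}/\sqrt{n_{in} + n_{out}}] $, and compares its weight variance with the standard heuristic. The names `n_in` and `n_out` and all function names are illustrative, not taken from the text.

```python
import math
import random

def uniform_variance(bound):
    # The variance of U[-bound, bound] is bound^2 / 3.
    return bound ** 2 / 3.0

def standard_bound(n_in):
    # Standard heuristic: U[-1/sqrt(n), 1/sqrt(n)], n assumed to be the fan-in
    return 1.0 / math.sqrt(n_in)

def normalized_bound(n_in, n_out):
    # Assumed form of the normalized initialization
    return math.sqrt(6.0) / math.sqrt(n_in + n_out)

def normalized_init(n_in, n_out, rng):
    bound = normalized_bound(n_in, n_out)
    return [[rng.uniform(-bound, bound) for _ in range(n_in)]
            for _ in range(n_out)]

n = 1000  # equal layer widths, as in the experimental setting
std_scale = n * uniform_variance(standard_bound(n))        # n * Var(W), about 1/3
norm_scale = n * uniform_variance(normalized_bound(n, n))  # n * Var(W), about 1
print(std_scale, norm_scale)

W = normalized_init(300, 200, random.Random(0))  # a 200 x 300 weight matrix
```

With equal layer widths, n·Var(W) is about 1/3 under the standard heuristic, so in the linear regime variances shrink by roughly a factor of three per layer. Under the assumed normalized form the product is about 1, which is what keeps the magnitudes stable across layers.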

Back-propagated Gradients During Learning

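- Top image: normalized histograms of back-propagated gradients with hyperbolic tangent activation, under the standard initialization.

- Bottom image: the same under the normalized initialization.

- As Fig 7 shows, at the start of training after the standard initialization, the variance of the back-propagated gradients becomes smaller as they propagate downward. This trend, however, reverses very quickly during training.

- The normalized initialization avoids this problem.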

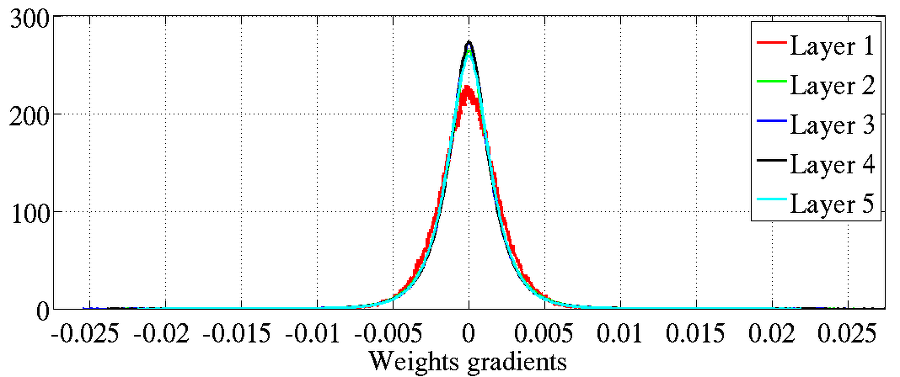

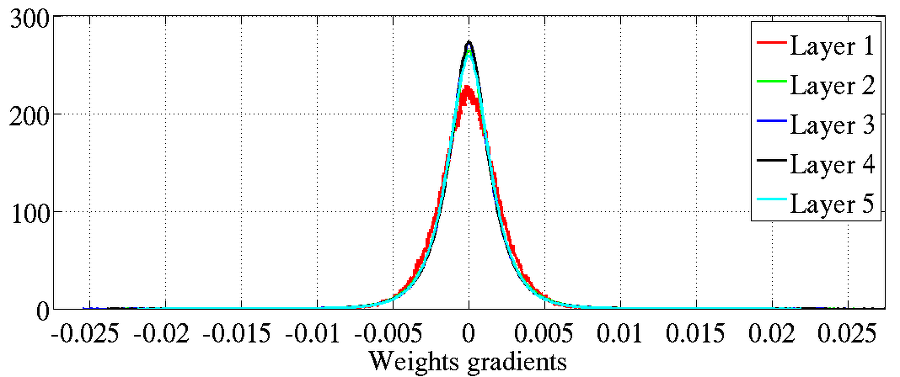
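The shrinking gradients at initialization can be reproduced with a small simulation. This is a simplified sketch, not the experiment from the paper: it assumes every unit sits in its linear regime (derivative 1), uses equal layer widths of 200, and assumes the normalized bound $ \sqrt{6}/\sqrt{n_{in} + n_{out}} $. All names are hypothetical.

```python
import math
import random

def uniform_matrix(n_out, n_in, bound, rng):
    return [[rng.uniform(-bound, bound) for _ in range(n_in)]
            for _ in range(n_out)]

def backprop_mean_squares(bound, width=200, depth=5, seed=0):
    """Mean squared gradient at each layer, from the top layer downward."""
    rng = random.Random(seed)
    grad = [rng.gauss(0.0, 1.0) for _ in range(width)]  # gradient at the top
    history = [sum(g * g for g in grad) / width]
    for _ in range(depth - 1):
        W = uniform_matrix(width, width, bound, rng)
        # Linear regime: the gradient w.r.t. a layer's input is W^T times
        # the gradient w.r.t. its output.
        grad = [sum(W[k][j] * grad[k] for k in range(width))
                for j in range(width)]
        history.append(sum(g * g for g in grad) / width)
    return history

width = 200
standard = backprop_mean_squares(1.0 / math.sqrt(width), width)
normalized = backprop_mean_squares(math.sqrt(6.0 / (width + width)), width)

print("standard  :", [f"{v:.3g}" for v in standard])
print("normalized:", [f"{v:.3g}" for v in normalized])
```

Under these assumptions the standard initialization loses roughly a factor of three in gradient magnitude (mean square) per layer, while the normalized one stays at about the same scale from top to bottom.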

Error Curves and Conclusions

- Top image: normalized histograms of weight gradients right after initialization, with hyperbolic tangent activation and the standard initialization.

- Bottom image: the same with the normalized initialization, shown for the different layers.

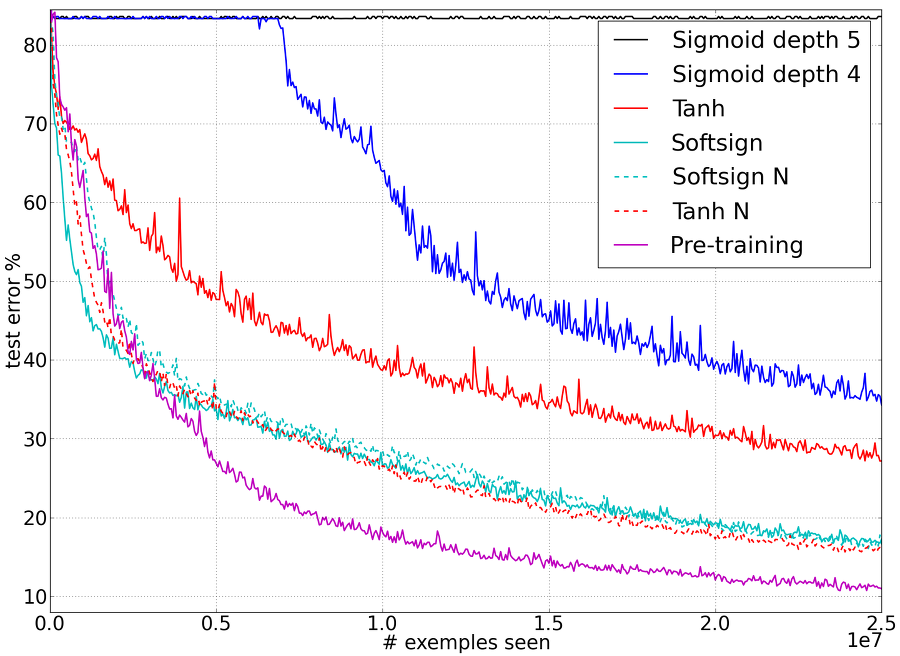

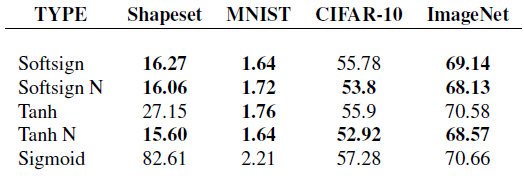

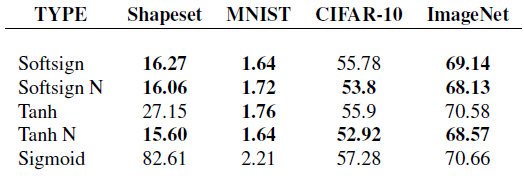

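- These results show the effect of the choice of activation function and initialization. "N" indicates that the normalized initialization was used.

- Conclusions

- Classical neural networks with sigmoid or hyperbolic tangent units and the standard initialization fare poorly: convergence is slow, and they are prone to poor local minima.

- Softsign networks are more robust to the initialization procedure than tanh networks, probably because of the gentler nonlinearity.

- For tanh networks, the proposed normalized initialization can be quite helpful, because the layer-to-layer transformations preserve the magnitudes of the activations (flowing upward) and of the gradients (flowing backward).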
